Section 7 Field Site Loggers

7.1 Introduction

This chapter is concerned with preparing the measured data for the OPTAIN field scale models. This will involve loading the data, transforming it into a usable format, and performing a quality check. The end of this chapter will result in data fit for use in SWAP as well as a tangentially related antecedent precipitation index (API) model for use in the SWAT+ modelling work, related to scheduling management operations using SWATFarmR .

7.1.1 Prerequisites

The following packages are required for this chapter:

require(readr) # for reading in data

require(dplyr) # for manipulating data

require(stringr) # for manipulating text

require(purrr) # for extracting from nested lists

require(plotly) # for diagnostic plotting

require(lubridate) # for date conversions

require(reshape2) # for data conversions

require(wesanderson) # plot colors

require(DT) # for tables

require(mapview) # for plotting

require(sf) # for shapefiles

show_rows <- 500 # number of rows to show in the tablesAs well as the following custom functions:

7.2 Data Processing

7.2.1 Loading Data

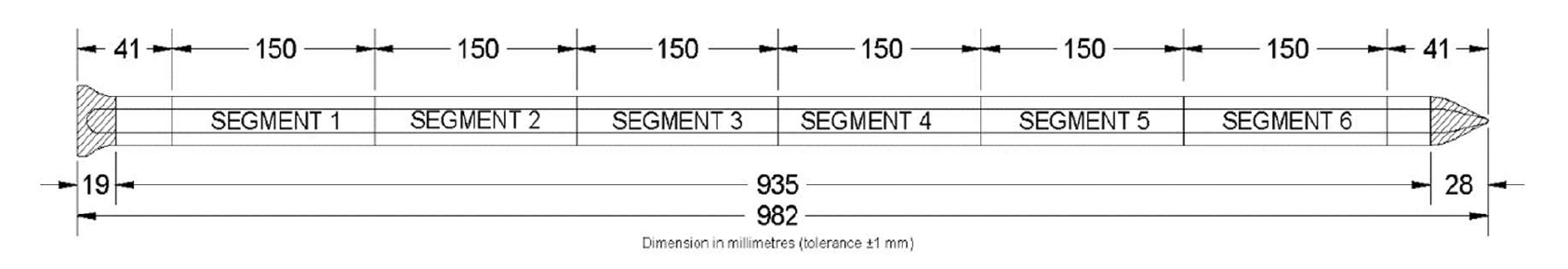

To start we need to load in our data from the GroPoint Profile data loggers. The logger is approx. 1m long and has 7 temperature sensors, and 6 soil moisture sensors located along its segments. “GroPoint Profile Multi Segment Soil Moisture & Temperature Profiling Probe Operation Manual” (2023)

Figure 7.1: GroPoint Segment Schematic (GroPoint Profile User Manual, Page 6)

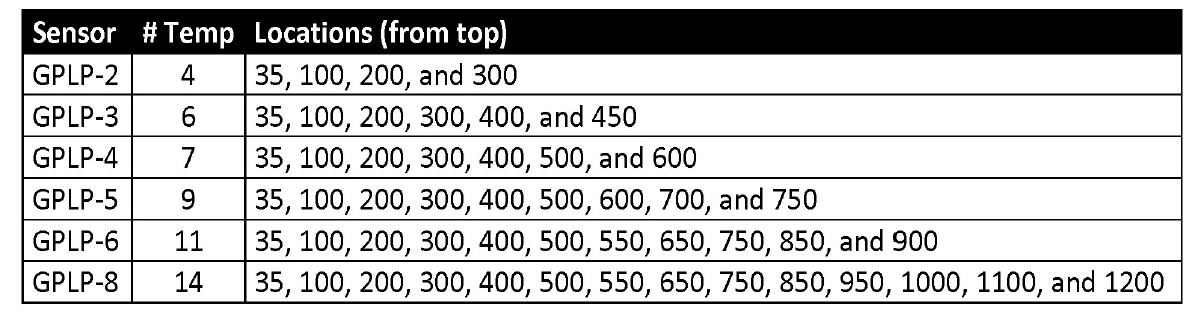

Figure 7.2: GroPoint Temperature sensor depths. We use GPLP-4 with seven temperature sensors. (GroPoint Profile User Manual, Page 6)

The logger data has been modified before import into R. The file names have been changed and column headers have been added. Missing values stored as “Error” have been removed.

Columns headers have assigned with variable name, depth, and unit of depth separated by an underscore.

Loading the data and modifying it is performed with readr and dplyr.

# list of files

datasheets <- list.files(path, full.names = TRUE)

# loading in the data, with an ID column 'source'

data <- read_csv(datasheets, show_col_types = F, id = "source")

# translating the source text to logger ID

data$site <- data$source %>% str_remove(path) %>% str_remove(".csv") %>%

str_split("_") %>% map(1) %>% unlist()

# removing the source column

data <- data %>% select(-source)

# removing duplicates

data <- data %>% distinct()Data preview

We need to force the date time column into the correct format. We can do this with the following command. (Please note, this has been done for region: United States this code may need to be adjusted to fit your regional settings)

7.2.2 Data Re-structuring

For ease of use in R, we will re-structure the data to be in “tidy” format (Read more: (Wickham 2014)).

# grab the measured variable column headers

mea_var <- colnames(data)[3:15]

# melt (tidy) the data by datetime and site.

data_melt <- data %>% melt(id.vars = c("datetime", "site"),

measure.vars = mea_var)

# parse out the variable

data_melt$var <- data_melt$variable %>% str_split("_") %>% map(1) %>%

unlist()

# parse out the depth of measurement

data_melt$depth <- data_melt$variable %>% str_split("_") %>% map(2) %>%

unlist()

# remove duplicates

data_melt <- data_melt %>% distinct()Data preview:

7.2.3 Summarize to Daily Means

As SWAP is a daily time step model, we do not need the hourly resolution, and can therefore simplify our analysis. We first need to parse out a (daily) date column

And then group the data by this date, followed by an averaging function.

daily_data <- data_melt %>% group_by(date, var, depth, site) %>%

summarise(value = round(mean(value, na.rm = TRUE), 1), .groups = "drop_last") %>% ungroup()Additional notes:

The values are rounded to one decimal place, as this is is the precision limit of the Logger.

The averaging is performed per date, variable, depth, and site

The functionality of

.groups = "drop_last"is unknown to me, however it does not change anything other than quieting a warning message.The data is “ungrouped” at the end, to avoid problems with plotting the data (with plotly) later on.

Data preview:

7.2.4 Including Air Temperature and Precipitation Data

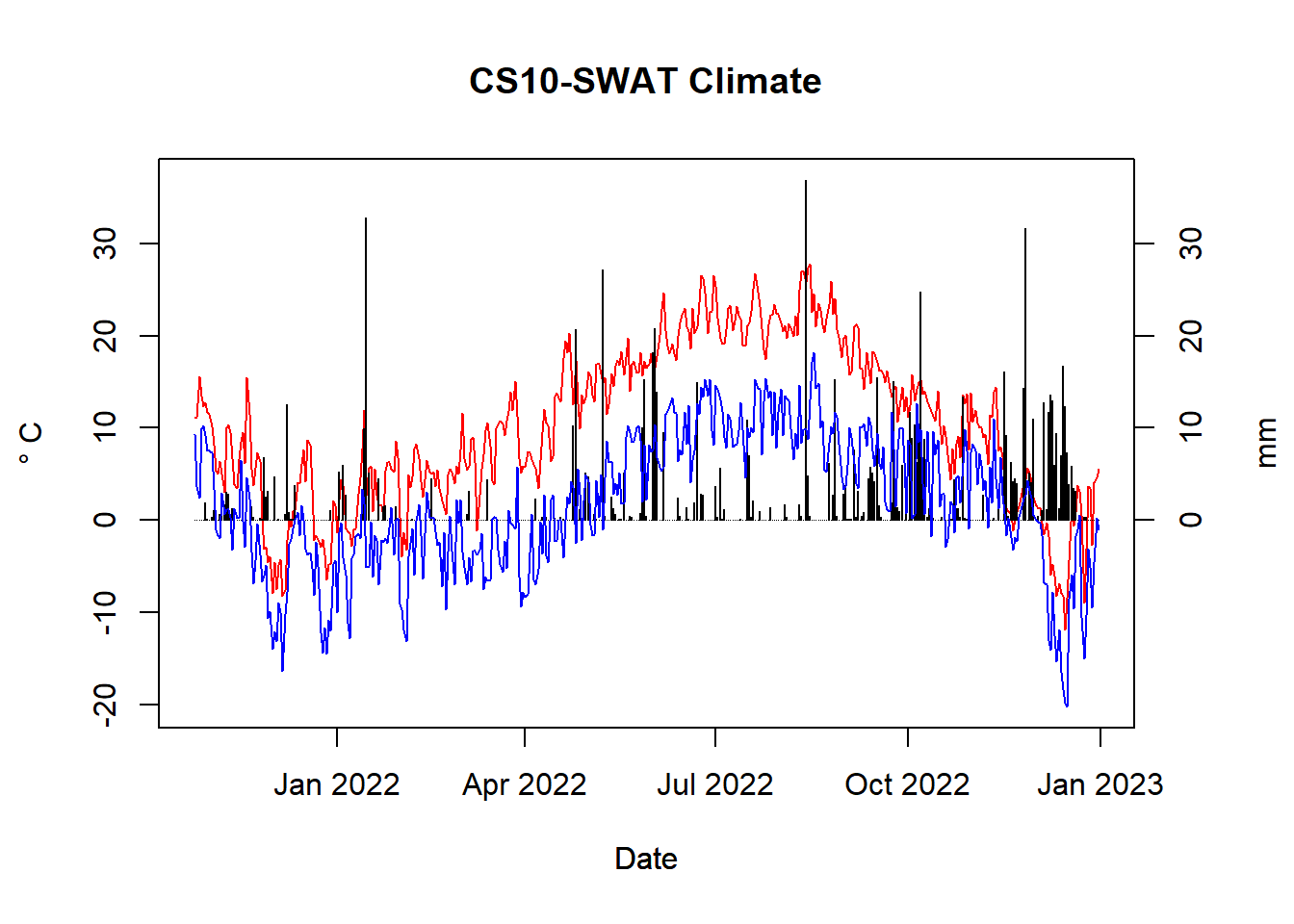

We are going to add the temp / precipitation data from out SWAT+ setup to give us insight on temperature and moisture dynamics for the data quality check.

Also important to note, is that all this code is only necessary, because of the strange format SWAT+ uses for its weather input. If it would just use a standard format (like everything else) then all this would not be needed.

Reading in the SWAT source precipitation data:

swat_pcp <- read.table("model_data/cs10_setup/optain-cs10/sta_id1.pcp", skip = 3, header = F, sep = "\t") %>% as_tibble()

swat_tmp <- read.table("model_data/cs10_setup/optain-cs10/sta_id1.tmp", skip = 3, header = F, sep = "\t") %>% as_tibble()Data preview:

Parsing out the first and last day and year.

FIRST_DOY <- swat_pcp[1,][[2]]-1

FIRST_YEAR <- swat_pcp[1,][[1]]

LAST_DOY <- swat_pcp[length(swat_pcp$V1),][[2]]-1

LAST_YEAR <- swat_pcp[length(swat_pcp$V1),][[1]]Converting these to an R format. Note that R uses a 0-based index only for dates. (very confusing!).

first_day <- as.Date(FIRST_DOY, origin = paste0(FIRST_YEAR, "-01-01"))

last_day <- as.Date(LAST_DOY, origin = paste0(LAST_YEAR, "-01-01"))

date_range <- seq(from = first_day, to = last_day, by = "day")Creating a dataframe from the source data

And filtering it to only cover the same range as our logger data.

And we do the same for temperature

FIRST_DOY <- swat_tmp[1,][[2]]-1

FIRST_YEAR <- swat_tmp[1,][[1]]

LAST_DOY <- swat_tmp[length(swat_tmp$V1),][[2]]-1

LAST_YEAR <- swat_tmp[length(swat_tmp$V1),][[1]]

first_day <- as.Date(FIRST_DOY, origin = paste0(FIRST_YEAR, "-01-01"))

last_day <- as.Date(LAST_DOY, origin = paste0(LAST_YEAR, "-01-01"))

date_range <- seq(from = first_day, to = last_day, by = "day")

tmp_data <- tibble(date = date_range,

tmp_max = swat_tmp$V3,

tmp_min = swat_tmp$V4)

tmp_data <- tmp_data %>% filter(date %in% daily_data$date)

Figure 7.3: Temperature range and precipitation for CS10

Note: this does (yet) include data from 2023, even though the loggers do.

7.3 Data quality check

In this section we are proofing the quality of the data.

7.3.1 Logger 600409 “Deep Rocky Sand”

Figure 7.4: Logger 600409

7.3.1.2 Soil Moisture Content

- First few days need to be removed

- 150mm depth seems usable before Sept2022 – However Attila (instrument installer) mentioned this sensor might be in the air (which would explain low moisture levels), so potentially useless

- 350mm depth seems usable, breaks around/after Sept2022

- 450mm depth seems useless, constantly getting wetter for an entire year, spiking post Sept2022

- 600mm depth seems useless, constantly getting wetter for an entire year, spiking post Sept2022

- 750mm depth seems useless, constantly getting wetter for an entire year.

- 900mm depth seems useless, constantly getting wetter for an entire year.

7.3.3 Logger 600411 “John Ivar Lower Area”

Figure 7.6: Logger 600411

7.4 Data Cleanup

7.4.1 General cleanup

Removing the first few days of the simulation

To be on the safe side, I am setting the logger cut-off date to XXXXXXXXX This could be tuned “per logger” later on if one feels confident.

And we will do the same for the climate data

# TODO dont actually do this.

pcp_data_clean <- pcp_data %>% filter(date > start_date)

pcp_data_clean <- pcp_data_clean %>% filter(date < cut_off_date)

tmp_data_clean <- tmp_data %>% filter(date > start_date)

tmp_data_clean <- tmp_data_clean %>% filter(date < cut_off_date) We will remove the 150 mm depth SMC measurement, as it was not submerged properly in the soil, and is therefore of little use. The temperature reading might be useful, so we will keep it.

7.4.2 Logger specific cleanup

7.4.2.3 Logger 600411

Logger 600411 needs temperature and SMC data removed from 2022-04-21 to 2022-05-10. It seems like the logger was removed from the soil.

# define time range to remove

remove_dates <- seq(from = as.Date("2022-04-21"),

to = as.Date("2022-05-10"),

by = "day")

# set values in that site and date range to NA

data_clean <- data_clean %>%

mutate(value = case_when((site == "600411" &

date %in% remove_dates) ~ NA, .default = value))Figure 7.8: Logger 600411, cleaned data